Clickbait Power Play: "Rust uses 12 times less CPU and 486 times more memory efficient than Java" - JVM Weekly vol. 108

Today - about "Clickbaits".

1. Clickbait Power Play: "Rust is 12 times less CPU and 486 times more memory efficient than Java"

Rust is coming, and it’s seemingly going to eat us all.

But before we dive into the mentioned clickbait, let's start with the real stuff. Java Bindings for Rust: A Comprehensive Guide is an in-depth manual that walks through integrating Rust with Java step-by-step. It covers strategies for managing interoperability issues between the two languages in applications, with practical tips on optimization and common pitfalls.

The guide explains how to leverage the Java FFM API (Foreign Function and Memory API), which enables efficient memory management and calls to Rust functions from within Java applications. Using classes like SymbolLookup, FunctionDescriptor, Linker, MethodHandle, Arena, and MemorySegment, developers can call Rust functions exposed through the C ABI. The Application Binary Interface (ABI) defines how a program's code communicates at a binary level with external functions or libraries, detailing aspects like how arguments are passed, how registers are managed, and how values are returned. Because Rust can export functions that follow the same ABI, Java developers can call them through the FFM API as if they were native C functions.
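To make the class names above a bit more concrete, here is a minimal sketch of an FFM downcall. It is not taken from the guide: to stay runnable without building a Rust library, it calls the C standard library's `strlen`, assuming JDK 22 or newer (where the FFM API is final) and a 64-bit platform where `size_t` maps to a Java `long`. The class name `FfmStrlen` is made up for illustration. A Rust function exported with the C ABI should be callable the same way, with the symbol looked up via `SymbolLookup.libraryLookup(...)` instead of the default lookup.

```java
import java.lang.foreign.Arena;
import java.lang.foreign.FunctionDescriptor;
import java.lang.foreign.Linker;
import java.lang.foreign.MemorySegment;
import java.lang.foreign.SymbolLookup;
import java.lang.foreign.ValueLayout;
import java.lang.invoke.MethodHandle;

public class FfmStrlen {

    // Calls the native C function: size_t strlen(const char *s)
    static long nativeStrlen(String text) {
        Linker linker = Linker.nativeLinker();
        // Default lookup exposes common libc symbols; a Rust library
        // would be loaded with SymbolLookup.libraryLookup(path, arena).
        SymbolLookup stdlib = linker.defaultLookup();

        // Describe the C signature: returns a long, takes one pointer.
        MethodHandle strlen = linker.downcallHandle(
                stdlib.find("strlen").orElseThrow(),
                FunctionDescriptor.of(ValueLayout.JAVA_LONG, ValueLayout.ADDRESS));

        // The confined arena frees the off-heap string when the block exits.
        try (Arena arena = Arena.ofConfined()) {
            // Copies the text off-heap as a NUL-terminated UTF-8 string.
            MemorySegment cString = arena.allocateFrom(text);
            return (long) strlen.invokeExact(cString);
        } catch (Throwable t) {
            throw new RuntimeException("Native call failed", t);
        }
    }

    public static void main(String[] args) {
        System.out.println(nativeStrlen("hello"));
    }
}
```

Note that `strlen` counts bytes, so for non-ASCII text the result reflects the UTF-8 encoding rather than the number of Java characters.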

The manual includes practical examples, from calling Rust functions from Java to managing data lifecycle and memory. In advanced sections, it covers thread safety, ownership, and borrowing rules in Rust from a Java perspective, and handling complex data types.

Now that we’ve covered "the brains", let’s get to the clickbait.

The article Delivering Superior Banking Experiences - How our customer-centric approach led us to Rust by Gilang Kusuma J. describes how CIMB Niaga, one of Southeast Asia's largest banks, migrated core banking microservices from Java to Rust to improve the performance of its digital platforms, OCTO Mobile and OCTO Clicks. The goal of this migration was to significantly reduce resource consumption while providing users with a more responsive and reliable experience. The developers claim Rust has led to impressive results:

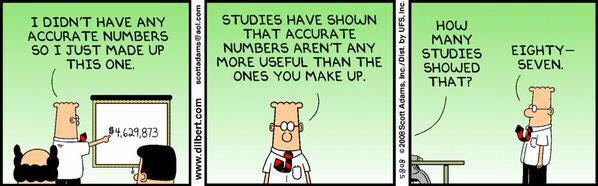

Utilization in our internal authentication service was reduced from 3 cores and 3.8 GB in the Java service to 0.25 cores and 8 MB in the Rust service, which is 12 times less CPU and 486 times more memory efficient.

The Java Virtual Machine (JVM) incurs some memory overhead due to features like garbage collection (GC), Just-In-Time (JIT) compilation, and helper threads. Basic overhead includes about 128 MB for metadata and structures needed for JIT, allowing faster execution of frequently used code parts. Adding GC can increase overhead by an additional 10% of heap size, depending on app size and workload. The GC also needs about one CPU core to function optimally, which affects resource usage but isn’t the main cause of large memory differences. So basic math says it's impossible to reduce 3.8 GB to 8 MB unless you completely rewrite old monkey code.

Extra JVM threads, buffers, and helper processes add more memory usage, for a total of around 150–250 MB of JVM overhead once GC, JIT, and other processes are factored in. So, in large apps (3.8 GB of memory), optimized JVM overhead remains relatively moderate. Believe me: I once worked on a project where JVM overhead was a problem because we deployed to an edge system, so I needed to do all that math. And we effectively used GraalVM, not Rust, to solve it.
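For those who want to check the arithmetic, here is a small sketch that recomputes the headline ratios and the share of the 3.8 GB that the overhead estimate above would explain. All inputs are the figures quoted in this section; the only assumption I add is 1 GB = 1024 MB, which is what reproduces the "486 times" number. The class and method names are made up for illustration.

```java
public class ClaimArithmetic {

    // Assumption: binary units (1 GB = 1024 MB), which reproduces the "486x" claim.
    static final double MB_PER_GB = 1024.0;

    // How many times smaller "after" is compared to "before".
    static double ratio(double before, double after) {
        return before / after;
    }

    // Fraction of the total footprint explained by a given JVM overhead.
    static double overheadShare(double overheadMb, double totalGb) {
        return overheadMb / (totalGb * MB_PER_GB);
    }

    // Memory left over once the overhead is subtracted, in MB.
    static double remainingMb(double overheadMb, double totalGb) {
        return totalGb * MB_PER_GB - overheadMb;
    }

    public static void main(String[] args) {
        System.out.printf("CPU ratio:    %.1fx%n", ratio(3.0, 0.25));
        System.out.printf("Memory ratio: %.1fx%n", ratio(3.8 * MB_PER_GB, 8.0));
        System.out.printf("JVM overhead share: %.1f%% to %.1f%%%n",
                100 * overheadShare(150, 3.8), 100 * overheadShare(250, 3.8));
        System.out.printf("Left after 250 MB overhead: %.1f MB%n",
                remainingMb(250, 3.8));
    }
}
```

Even with the pessimistic 250 MB estimate, the JVM itself would explain only a single-digit percentage of the original footprint, which is why the rest has to come from the application code.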

Such drastic memory reduction, as described in the article (from 3.8 GB to 8 MB), suggests that excessive memory use is due to unoptimized code rather than the JVM itself. The migration results highlight significant differences in memory and CPU usage, but the author doesn't mention the Java version or frameworks used before migration. Comparing old, outdated Java to Rust creates an uneven basis for comparison, as results could reflect runtime environment differences rather than technology alone.

Additionally, the article skips alternative optimization options in Java, such as tuning JVM parameters, modern memory management, or new design patterns. It's also unclear what testing methodology was used, casting doubt on the results.
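As an illustration of the kind of measurement I would want to see before any tuning, here is a minimal sketch that asks a running JVM how its memory splits between heap and non-heap areas, using the standard `java.lang.management` API. It is my own example, not anything from the CIMB Niaga article, and the class name `JvmMemoryReport` is hypothetical. The non-heap figure covers areas such as metaspace and the code cache, but not everything (thread stacks, for example, are not included), so treat it as a first approximation.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryMXBean;
import java.lang.management.MemoryUsage;

public class JvmMemoryReport {

    static long toMb(long bytes) {
        return bytes / (1024 * 1024);
    }

    // Builds a one-line summary of the current JVM memory usage.
    static String report() {
        MemoryMXBean memory = ManagementFactory.getMemoryMXBean();
        MemoryUsage heap = memory.getHeapMemoryUsage();
        MemoryUsage nonHeap = memory.getNonHeapMemoryUsage();
        // Upper bound for the heap, e.g. as set with -Xmx.
        long maxHeap = Runtime.getRuntime().maxMemory();

        return String.format(
                "heap used=%d MB, heap committed=%d MB, non-heap used=%d MB, max heap=%d MB",
                toMb(heap.getUsed()), toMb(heap.getCommitted()),
                toMb(nonHeap.getUsed()), toMb(maxHeap));
    }

    public static void main(String[] args) {
        System.out.println(report());
    }
}
```

Running this with different settings (for example `-Xmx256m`) gives a quick feel for how much of a service's footprint is simply the configured heap rather than memory the code actually needs.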

PS: Not attacking anybody, just curious. I'm sincerely curious to learn the rest of the story, Gilang Kusuma J.

PS2: Huge congratulations on migration - I know these are hard 🙇

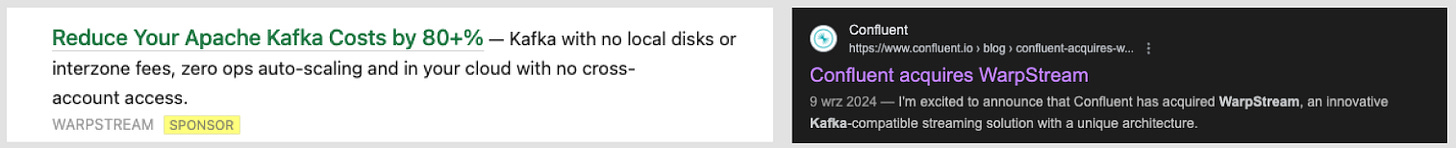

And I'm not going to suggest that Java doesn’t fall behind in some areas. Take the Kafka vs WarpStream comparison (Kafka in Java vs WarpStream in Go). Released in 2022, WarpStream offers a new-generation data streaming platform, providing a more flexible, efficient alternative to Apache Kafka. Written in Go, WarpStream creates binaries with lower memory usage and faster startup times than Java-JVM combinations.

As a result, WarpStream uses system resources more efficiently, enhancing scalability and performance. Go also supports better concurrency, enabling WarpStream to handle multiple data streams smoothly, reducing latency, and increasing throughput. This likely explains why, in September 2024, Confluent (the company behind Kafka) announced its acquisition of WarpStream. Confluent aims to expand its “Bring Your Own Cloud” (BYOC) solutions, allowing clients to run streaming services in their cloud environments, but acquiring a potential competitor while it was still reasonably cheap is also likely a sound business decision.

The days when every modern database or system that needs maximum efficiency was Java-based may be behind us; Rust is here as well. But honestly, throwing around numbers like 486x more efficient is ridiculous.

Back to the original article: it also omits the costs and difficulties of migration, such as the time needed to learn the new language and train the team, which may increase the cost of deploying Rust.

In the article Rewrite it in Rails, the author shares their experience building a customs declaration app initially in Ruby on Rails. Rails allowed quick creation of a fully functional system, but due to Ruby limitations, the author migrated to a more advanced stack with Rust and SvelteKit. Despite initial enthusiasm, migration proved to be time-consuming and required extensive infrastructure without directly benefiting users. Much time went to building core functions like database management and authorization instead of enhancing business features.

In the end, the author returned to Rails, focusing on quick delivery to users. Rails, with its rich ecosystem and integrated tools, was more efficient, especially for a rapidly evolving app needing quick feature additions. Migration was challenging, but Rails avoided complex helper code and provided scaling tools. The lesson learned was that building an app requires thoughtful trade-offs – Rails, despite its flaws, enables focusing on the product, not building infrastructure from scratch.

And of course, this is as anecdotal as the Rust praise. I still thought this perspective was worth sharing. Truthfully, I’d love to try Rust practically sometime.

2. What's with the layoffs at Oracle? Who the heck knows 🤷

In section two, we’ll dive into everyone’s "favorite" topic of recent years: layoffs. Interesting, given it affects one of the key companies in the Java community. I wanted to touch on this to highlight the selective reporting that happens around layoffs.

According to channelfutures.com (TBH, first time hearing about it, but they have more subscribers than this newsletter, so I won’t question their authority), Oracle is undergoing another round of layoffs, mainly impacting Oracle Cloud Infrastructure (OCI). As always, Hacker News and forums like thelayoff.com have picked up on this, with comments suggesting hundreds of employees lost their jobs, including some in key positions. Exact numbers remain uncertain, but OCI seems significantly affected, and some workers noted that the process was quick, with no chance to transfer to other roles in the company. Layoffs affected employees from recent grads to experienced managers and coincided with Oracle’s partnerships with cloud leaders AWS and Google Cloud, allowing it to offer database services on these platforms. Experts speculate that Oracle, the fourth largest cloud provider, may be optimizing resources before implementing new strategies with these tech giants.

Does this mean Oracle is falling apart as a company? I don’t have insider info, so I can’t say, but I feel a cognitive dissonance.

Just last week, I read State of the Software Engineering Job Market in 2024 by Gergely Orosz, whose Pragmatic Engineer is Substack’s most popular blog. Gergely analyzed trends in the software engineering job market, highlighting companies that are hiring and downsizing. Surprisingly, Oracle stands out as a leading recruiter, alongside Amazon and TikTok/ByteDance. Oracle’s focus on hiring senior positions reflects its goal of strengthening cloud infrastructure, a key growth driver. With a target of $100 billion annual revenue by 2029, Oracle’s recruitment aligns with Amazon’s strategy – heavy hiring despite high turnover from return-to-office policies.

The article also contrasts examples of companies that have reduced hiring, such as Intel, Klarna, Salesforce, and Spotify, often due to challenging market conditions and lower demand. While Oracle and others (e.g., Amazon, NVIDIA) focus on aggressive expansion and new products (Oracle with AWS and Google Cloud partnerships, NVIDIA leveraging AI), others optimize staffing without replacing departing employees. Orosz’s article shows how different sectors adjust hiring strategies.

I’m not here to defend any particular corporation, but I wanted to point out the informational noise. Two texts paint different pictures of Oracle’s cloud business, and honestly, moments like these give me whiplash. Maybe it’s time to start reading stock reports and “doing my own research.” And I guess, like with Rust earlier, I’m in a bit of a debunking mood this week.

3. Beyond LLMs - low-level AI in Java

Finally, let’s talk a bit about AI on the JVM - my latest passion. Recently, the official Java blog published an article that overlaps quite a bit with my Devoxx talk this year, but in written form and with a deeper dive.

Advancing AI by Accelerating Java on Parallel Architectures provides a deep dive into the latest platform enhancements, broadening AI capabilities, with a focus on parallel processing support. Java, often associated with “enterprise” apps, is gradually entering an era where it can finally effectively use less conventional hardware and hardware-accelerated architectures.

The article provides a bird’s eye view of ongoing projects. For example, it highlights the Vector API, likely familiar to many (currently in its eighth incubation phase), which allows Java developers to use SIMD (Single Instruction, Multiple Data) instructions for parallel data processing, significantly improving performance on large datasets.

Another initiative discussed is Project Babylon, with Code Reflection that transforms Java code into formats executable on GPUs, expanding Java’s hardware support.

The Panama Foreign Function and Memory (FFM) API’s role is also highlighted, simplifying interactions between Java and native libraries and managing off-heap memory. FFM overcomes traditional JNI limitations, simplifying memory management and enhancing data transfer between JVM and hardware accelerators like GPUs. It provides abstractions for native memory handling, crucial for seamless GPU kernel integration outside Java’s heap.
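To show what "off-heap memory" looks like in practice, here is a minimal sketch that copies a float array into native memory with the FFM API and reads it back. It is my own illustration rather than code from the Java blog article, it assumes JDK 22 or newer, and the class name `OffHeapBuffer` is hypothetical. A real GPU integration would hand the segment's address to a native library instead of summing it in Java.

```java
import java.lang.foreign.Arena;
import java.lang.foreign.MemorySegment;
import java.lang.foreign.ValueLayout;

public class OffHeapBuffer {

    // Copies the values into native (off-heap) memory and sums them from there.
    static float sumOffHeap(float[] values) {
        // The confined arena owns the native memory and frees it on close.
        try (Arena arena = Arena.ofConfined()) {
            MemorySegment segment = arena.allocateFrom(ValueLayout.JAVA_FLOAT, values);

            long count = segment.byteSize() / ValueLayout.JAVA_FLOAT.byteSize();
            float sum = 0f;
            for (long i = 0; i < count; i++) {
                // Reads the i-th float directly from the off-heap segment.
                sum += segment.getAtIndex(ValueLayout.JAVA_FLOAT, i);
            }
            return sum;
        }
    }

    public static void main(String[] args) {
        System.out.println(sumOffHeap(new float[] {1f, 2f, 3.5f}));
    }
}
```

The key design point is the explicit lifetime: the memory lives outside the garbage-collected heap and is released deterministically when the arena closes, which is the property native libraries and accelerators rely on.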

Don’t you feel these topics form a cohesive picture? Java’s creators do, so they’ve tied them together with a high-level concept – the Heterogeneous Accelerator Toolkit (HAT). Its goal is a unified programming model for Java on GPUs and FPGAs, using Code Reflection and FFM. HAT simplifies GPU programming patterns and kernel calls, enabling Java to directly use parallel processing resources.

You can learn more about HAT in the video from the latest JVMLS.

And before you ask why we don’t just call Python models via an API: we could. But instead of the usual “Java is for enterprise, requiring tight integration with JVM systems and long-term stability,” here’s a better answer: where’s the fun in that?

However...

I found the discussion about Java and Rust very interesting. While I recognize that you had multiple possible reasons to explain the numbers, I think it is also fair to say these reasons apply to most Java applications in use today: they 1) are bloated by bad design and frameworks, 2) have unoptimized code, 3) are old and out of date, and 4) would benefit from starting over, which allows you to address all the bad choices you made before. To be fair, it's not unreasonable for people in similar circumstances to get order-of-magnitude efficiency returns by switching to Rust. I know I am on the JVM Weekly newsletter, so it might be heresy to say so -- I actually don't doubt their shocking numbers. (They seem extreme, but it gets us talking.) There's more at play here than just an indictment against Java. We are seeing backlash in the industry right now regarding library proliferation - which saves time and money - but also commonly lowers product quality. (As an aside, I think it's only a matter of time before we start paying for libraries that make security a priority.) Going back to basics, where you have to think about efficiency -- in this case Rust -- has a lot of mileage as well. First, developers came for our hard drive space with library bloat. Then, they started wasting CPU time. Finally, we gave them gigabytes of memory. No one thinks about efficiency anymore. I honestly wonder, because I started coding in 80 columns, how many new programmers know how to design or code an algorithm? These numbers seem to me a measure of the industry as a whole. For the record, I still program in Java. I'm not an advocate for Rust. But I often marvel at how much control over my app I give to frameworks every single day.