The road to AWS Lambda SnapStart - guide through the years of JVM "cold start" tinkering - JVM Weekly #24

Some innovations are unimpressive until you put them in their proper context. Such is the case with AWS Lambda SnapStart.

Well, I thought that after last week's issue - entirely devoted to Spring - today I would take on some smaller topics (we've accumulated a couple of interesting releases, e.g. the new Gradle, but not only). "Unfortunately" AWS re:Invent, Amazon's biggest annual event, is taking place. Usually, for the JVM world, it is of marginal importance, but one of the announcements is interesting enough that I decided to once again use the whole edition to cover a single topic...

After all, Amazon showed AWS Lambda SnapStart - a Lambda-dedicated solution to the Cold Start problem. That's why today we're going to talk about Serverless... and why it's been so hard over the years to use it effectively with Java.

What is the "Cold Start Problem"?

I assume that by reading this text you know what Serverless functions are. I think that at least AWS Lambda has already entered the programming mainstream. For the sake of argument, however, we are talking here about the Holy Grail of software decomposition - a platform within which we separately scale not monoliths, not services, but individual functions. By operating with artifacts that realistically have a single input/output, we get (alert: huge oversimplification here) two big advantages.

First, the payment model - we pay per use of a specific function, which means a level of auto-scaling unavailable in other models. No traffic -> no costs.

Second - no maintenance overhead. It's the runtime environment vendor that worries about having the computing power waiting for each new client using your application.

Not to get too cute, since there were two advantages it's time for two disadvantages. First, decomposition into such atomic components makes it really hard to reason about and manage an application without the right tooling. Second (and this is what we will focus on in this text), serverless applications suffer from the so-called "cold start" problem. You know that applications written in Java - before they can even "accept" traffic - usually need some time to start up. This is related to the JVM's operating model, and we will gradually walk through the various aspects of that initialization. For the moment, however, the important thing for us is that firing up a "cold" JVM just to run a single function is unacceptable in terms of the delays involved. Knowing all that, it's time to look at how it's even possible that some Serverless functions are written in Java.

In case you haven't seen enough yet: at one point the Internet was flooded with texts on serverless - I have a feeling it's every Content Marketer's beloved topic. The pros and cons of this architecture are well described, for example, by Cloudflare in its Why use serverless computing? Pros and cons of serverless.

How Java has so far tried to deal with it

AWS Lambda native solutions

It's not that the mentioned Cold Start completely eliminates the JVM from the AWS Lambda environment. First of all, we should keep in mind that the Cold Start only applies to selected Lambda calls. Lambda functions can remain warmed up for some time after the initial startup. Their runtime, once started, is shared between executions. After some period of inactivity, the runtime is "killed", but there are ways to keep it alive.

Amazon itself has historically shared ways to squeeze maximum effect out of Java Lambdas in the publication Optimizing AWS Lambda function performance for Java. It points out that it is particularly useful to set the compilation level to one that provides the maximum achievable effect in a short period of time. You can achieve that by setting the environment variable JAVA_TOOL_OPTIONS to "-XX:+TieredCompilation -XX:TieredStopAtLevel=1".
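If you want to check that the flags actually reached the JVM, a tiny diagnostic can help. This is only a sketch of mine, not something taken from the AWS publication: the class `JitFlags` and the helper `stopsAtC1` are made-up names, and I am assuming that the JVM lists options picked up from JAVA_TOOL_OPTIONS among its reported input arguments.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

class JitFlags {
    /** True when the given JVM arguments cap tiered compilation at level 1 (C1 only). */
    static boolean stopsAtC1(List<String> jvmArgs) {
        return jvmArgs.contains("-XX:TieredStopAtLevel=1");
    }

    public static void main(String[] args) {
        // Assumption: options from JAVA_TOOL_OPTIONS show up in this list.
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("JVM arguments: " + jvmArgs);
        System.out.println("Stops at C1: " + stopsAtC1(jvmArgs));
    }
}
```

Stopping at level 1 trades peak throughput for a faster ramp-up, which tends to suit short-lived functions better than long-running services.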

(Application) Class Data Sharing

However, it's not like we've been left to the mercy of "generic" solutions provided by Amazon (like maintaining warmed-up runtime environments) or ones that have always been in the JVM (like Tiered Compilation). On the contrary, the JDK developers understood very quickly (already around JDK 9) that the serverless niche was slipping away from them, so they began to ensure that the language runtime became as Cloud-Native as possible. That's why the so-called AppCDS - Application Class Data Sharing - appeared in JDK 10, which I consider the first attempt at such an optimization.

What is AppCDS? This functionality builds on the Class-Data Sharing that has existed since JDK 1.5. Indeed, it's not that it was only in the era of Serverless that we started to worry about the startup time of our applications - it's just that the fruit used to hang a little lower in the past. After all, every time the JVM is started, it is necessary to load the classes included in the language runtime - e.g. the java.lang package, but not only that. These remain virtually the same regardless of the process, so instead of having to deal with their initialization each time, you can dump the end result to disk as an archive and restore it later. Initially, this applied only to Java internal classes. However, with JDK 10, the mechanism was extended to classes created by application developers as well. This optimization is perfectly in line with the recurring process of cloud releases, although more in the case of "regular" servers than Lambda functions. Still, it laid out the path that future improvements would follow as well - caching.
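To make the "dump once, reuse later" idea a bit more tangible, here is a rough sketch that only builds the two command lines involved. It assumes JDK 13 or newer, where the `-XX:ArchiveClassesAtExit` option is available (JDK 10 needed a more manual, multi-step procedure). The class `AppCdsCommands` and the file names `app.jar` and `app.jsa` are placeholders of mine.

```java
import java.util.List;

class AppCdsCommands {
    /** Trial run: the JVM writes an archive of the loaded application classes when it exits. */
    static List<String> dumpCommand(String jar, String archive) {
        return List.of("java", "-XX:ArchiveClassesAtExit=" + archive, "-jar", jar);
    }

    /** Later runs: the JVM maps the archive instead of parsing those classes again. */
    static List<String> runCommand(String jar, String archive) {
        return List.of("java", "-XX:SharedArchiveFile=" + archive, "-jar", jar);
    }

    public static void main(String[] args) {
        System.out.println(String.join(" ", dumpCommand("app.jar", "app.jsa")));
        System.out.println(String.join(" ", runCommand("app.jar", "app.jsa")));
    }
}
```

The archive is tied to the JDK build and classpath it was created with, so in practice it would be regenerated as part of each release rather than kept around.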

You can learn more about CDS and AppCDS from dev.java, where both are described in an accessible way. A very good publication on the practical uses of Application Class Data-Sharing, on the other hand, was created by Nicolai Parlog.

Class Pre-Initialization

This one is more of a curiosity, but I couldn't help including it. This September, Alibaba announced a joint initiative with Google - the FastStartup Incubator project, an attempt to use Class Pre-Initialization for cold start optimization. As I mentioned in the previous section, most cold start optimization methods rely on some form of cache. The problem with caching, however, is that in order to use it safely, it is necessary to be sure that the class does not have any side effects. With this information, we are potentially able to make more aggressive optimizations, but without some hints, automatic tools are lost in the woods. For this reason, the existing JVM Pre-Initialization mechanism for classes can currently only be used for a strictly defined list of classes.

That's why both companies want to create jdk.internal.vm.annotation.Preserve annotation and/or bring the ability to pass a list of classes that can be safely pre-initialized. This will make the optimization methods present in Java more widely applicable. FastStartup Incubator is in its early stages, but both Google and Alibaba - as companies having their own clouds - are highly motivated (💵) to push it forward.
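To see why side effects matter so much here, compare two static initializers. This is purely an illustration of mine (the classes `SquareTable` and `StartupInfo` are invented and have nothing to do with the FastStartup code): the first one could be computed once and cached forever, while caching the second would freeze a timestamp and a user name from the machine that built the cache.

```java
class PreInitDemo {
    /** Safe to pre-initialize: the static state depends only on constants. */
    static class SquareTable {
        static final int[] SQUARES = new int[10];

        static {
            for (int i = 0; i < SQUARES.length; i++) {
                SQUARES[i] = i * i;
            }
        }
    }

    /** Not safe to cache blindly: the initializer observes the outside world. */
    static class StartupInfo {
        static final long STARTED_AT_MILLIS = System.currentTimeMillis();
        static final String USER = System.getProperty("user.name");
    }

    public static void main(String[] args) {
        System.out.println("3 squared: " + SquareTable.SQUARES[3]);
        System.out.println("started at: " + StartupInfo.STARTED_AT_MILLIS);
        System.out.println("user: " + StartupInfo.USER);
    }
}
```

A tool cannot easily tell these two cases apart on its own, which is where an explicit annotation or an allow-list of classes comes in.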

Native images - Project Leyden, Ahead-of-Time Compilation, GraalVM

Since it takes so much time to initialize classes and classpath, maybe it's best to just get rid of it and put everything into the binaries right away during the compilation?

Following this line, another method often used in the serverless world today is to use a Lambda Custom Runtime and GraalVM to create native images. These achieve full performance from the moment of startup, and their launch time is greatly reduced compared to standard Java applications. On the Internet, you will find many texts dedicated to this approach, e.g. it is well described by Arnold Galovics in his text Tackling Java Cold Startup Times On AWS Lambda With GraalVM. If you've ever been interested in cold start optimization in Java, this is probably the method you've heard about.

The problem with AoT compilation is that while it allows a certain baseline level of performance to be achieved, the process is not as optimal as one based on Just-In-Time compilation. This is because the application already running is able to make optimizations specific to how it is used - and it does so based on information that a compiler running statically simply does not have access to. This would probably be swallowable for Serverless, but GraalVM (and AoT as a whole) also requires users to change their own habits, especially when it comes to using the Reflection API, for example.
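The Reflection API problem is easiest to see on an example. In the sketch below (the class `ReflectionDemo` and its method are my own names), the class to instantiate is known only at run time, so a compiler working under a closed-world assumption cannot tell from the code alone that it has to keep that class in the binary. On a regular JVM this just works; a native image typically needs such classes to be declared up front in its reflection configuration.

```java
class ReflectionDemo {
    /** Instantiates a class whose name is only known at run time. */
    static Object instantiate(String className) {
        try {
            return Class.forName(className).getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalArgumentException("Cannot instantiate " + className, e);
        }
    }

    public static void main(String[] args) {
        // The name could just as well come from a config file or an annotation scan.
        String className = args.length > 0 ? args[0] : "java.util.ArrayList";
        System.out.println(instantiate(className).getClass());
    }
}
```

Frameworks that rely heavily on this pattern are the ones that had to change the most to work well with AoT compilation.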

I think that is enough about AoT, because this optimization method remains firmly out of the scope of this issue and I put it here mainly for the sake of order. You can read more about the above in one of the previous issues of JVM Weekly: How committing GraalVM to OpenJDK changes the rules for Project Leyden.

CRIU

We're meandering a bit, but we're slowly getting closer to the point. Methods like FastStartup and AppCDS are used to cache individual portions of the JVM runtime. Checkpoint/Restore in Userspace (CRIU), however, is a Linux feature that allows you to take a "snapshot" of an entire running application process and dump it to disk. Then, the next instance can be started from the point where the dump was taken, reducing e.g. startup time (but not only).

Do I have any retro gamers among my readers? If so, the whole thing works in a similar way to the Save State functionality in emulators. Methods such as AppCDS resemble classic saving - we select those fragments that will allow us to recreate the state of the application later, and only save them. Save State does not play with such finesse - as computers have moved forward and we have more disk space, we simply dump the entire memory state to disk and then recreate 1:1 for ourselves when needed.

CRIU has its own problems - both from a security and convenience point of view - because we are talking about a generic operating system function outside the JVM. In turn, each application has slightly different characteristics. Depending on whether we're talking about an application server, a web application, or a batch job, writing to disk should take place at a different point in time. It can be difficult to grasp without the context of the virtual machine. As OpenLiberty writes in its Faster start-up for Java applications on Open Liberty with CRIU:

It would be useful to have an API so that the application can specify when it would like a snapshot to be taken; this would be a valuable addition to the Java specification.

Well, now it's high time to move on to CRaC!

PS: If you want to learn more about CRIU, Red Hat's Christine Flood recorded an in-depth talk, CRIU and Java opportunities and challenges, about the usage of this technology in Java.

CRaC

Well, we come to the final stage of our JVM optimization journey. This is because CRaC (Coordinated Restore at Checkpoint) is the aforementioned CRIU API that Open Liberty was asking for. It allows you to create a "checkpoint" - a memory dump - at any point in the application's operation, as defined by the software developer, using a command:

    jcmd target/spring-boot-0.0.1-SNAPSHOT.jar JDK.checkpoint

It also allows you to handle how the application should behave both when a checkpoint is created and restored, so it allows you - as a CRaC API user - to manage its state.

    import jdk.crac.Context;
    import jdk.crac.Core;
    import jdk.crac.Resource;

    class ServerManager implements Resource {
        ...

        // Called before the snapshot is taken: stop accepting traffic.
        @Override
        public void beforeCheckpoint(Context<? extends Resource> context) throws Exception {
            server.stop();
        }

        // Called after the process is restored from the snapshot: start again.
        @Override
        public void afterRestore(Context<? extends Resource> context) throws Exception {
            server.start();
        }
    }
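Since the snippet above needs a CRaC-enabled JDK to compile, here is a dependency-free stand-in that runs anywhere and shows the same lifecycle. Keep in mind that everything in it is hypothetical: the `SnapshotAware` interface only mimics the two callbacks and is not the real `jdk.crac.Resource`, and `ConnectionHolder` just flips a flag where real code would close and reopen sockets or files.

```java
class CheckpointLifecycleDemo {
    /** Hypothetical stand-in for the two CRaC callbacks; not the real jdk.crac.Resource. */
    interface SnapshotAware {
        void beforeCheckpoint();

        void afterRestore();
    }

    /** Pretends to own a network connection that must not end up inside a snapshot. */
    static class ConnectionHolder implements SnapshotAware {
        private boolean open = true;

        @Override
        public void beforeCheckpoint() {
            open = false; // real code would release sockets, file handles and the like
        }

        @Override
        public void afterRestore() {
            open = true; // real code would re-establish them in the restored process
        }

        boolean isOpen() {
            return open;
        }
    }

    public static void main(String[] args) {
        ConnectionHolder holder = new ConnectionHolder();
        holder.beforeCheckpoint();
        System.out.println("open while snapshotted: " + holder.isOpen());
        holder.afterRestore();
        System.out.println("open after restore: " + holder.isOpen());
    }
}
```

The point of the pair of callbacks is that anything tied to the outside world gets released before the dump and recreated afterwards, so the snapshot itself contains only state that is safe to copy.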

In the context of this text, the genesis of CRaC is important as well. This is because, from the very early stages, Amazon engineers from the Corretto team (their JDK implementation) were involved in it. So the OpenJDK mailing list had been leaking for some time that Amazon was working on improving support for Java in Serverless applications. How does AWS want to use CRaC then? Here's a look at how AWS Lambda works underneath.

How is AWS Lambda structured?

There are a great many projects that attempted to simulate the AWS Lambda (and other serverless) experience within, for example, Kubernetes. In fact, however, it was very difficult for them to provide an experience even close to that of Amazon's product. This is because instead of using generic containers, AWS relied on a dedicated, serverless-optimized runtime environment. It is from this approach that most of Lambda's innovations, which are so difficult for generic solutions to replicate, stem.

Lambda's runtime consists of a mass of strictly specialized components. For example, EC2 Nitro instances are used as infrastructure. These are bare-metal machines devoid of virtualization overhead (as would be the case with T-type EC2s, which are most often used for the likes of Elastic Container Service). The Firecracker project - specialized micro virtual machines designed for serverless applications - runs on them. They provide process isolation and are able to share a common runtime context. Thanks to this, Amazon is able to ensure that after the initial warm-up of the first instance, subsequent instances will start up much faster. Firecracker, in turn, manages in a granular way which fragments should be reused and which must be created from scratch each time.

Once again - everything above is an oversimplification just for context, because this is an overview for JVM developers, not a publication for architects of their own Serverless cloud. However, if you are hungry for more details - Behind the scenes, Lambda is the publication you are looking for.

So... how does SnapStart work in the end?

That being said, since we have all the pawns already on the board, let's finally talk about the latest innovation from Amazon, which is AWS Lambda SnapStart.

The execution of each Lambda function consists of three phases - Init (governed by quite specific laws), Invoke and Shutdown. Bootstrapping within the first of these brings the entire environment to a state where it is able to accept traffic. SnapStart captures the state of the Lambda at the end of Init and keeps it for later, when our function goes cold again. It does this using CRIU, and in the first version it supports only Java, thanks to the CRaC API. The whole thing underneath, meanwhile, is powered by the snapshotting mechanism provided by Firecracker, and the aforementioned CRaC API helps when a snapshot refresh is needed - AWS Lambda provides the corresponding hook.
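From the point of view of your code, the split between Init and Invoke looks roughly like the sketch below. To keep it runnable without any AWS dependency, I use a plain class instead of the real handler interface from the Lambda Java runtime library; the names `PhaseDemo`, `Handler` and `handleRequest` as used here are only stand-ins.

```java
import java.util.ArrayList;
import java.util.List;

class PhaseDemo {
    /** Dependency-free stand-in for a Lambda handler class. */
    static class Handler {
        final List<String> events = new ArrayList<>();
        private final String expensiveState;

        Handler() {
            // Init phase: runs once per execution environment.
            events.add("init");
            expensiveState = "config loaded";
        }

        String handleRequest(String input) {
            // Invoke phase: runs for every request and reuses the state built during Init.
            events.add("invoke");
            return input + " (" + expensiveState + ")";
        }
    }

    public static void main(String[] args) {
        Handler handler = new Handler();
        System.out.println(handler.handleRequest("first"));
        System.out.println(handler.handleRequest("second"));
        System.out.println(handler.events);
    }
}
```

Everything done in the constructor (and in static initializers) is the part that ends up in the snapshot, which is why moving expensive setup there pays off twice with SnapStart.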

Of course, there are some challenges to keep in mind here. Standard randomization in Java generates a seed at initialization. Because of that - as we are operating on a memory dump - all the results of pseudo-random operations will be the same after every restore, making java.util.Random rather useless. Amazon has made sure that e.g. java.security.SecureRandom behaves correctly, but if any of your dependencies use the classic Random....
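You can reproduce the effect on your own machine without any Lambda at all. In the sketch below I use plain Java serialization as a stand-in for the memory snapshot, which is obviously an assumption of mine and not how SnapStart works internally, but the consequence is the same: two "restores" of one initialized generator produce identical numbers. The class and method names are invented for the demo.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.UncheckedIOException;
import java.util.Random;

class SnapshotRandomDemo {
    /** Stand-in for taking a snapshot: captures the generator together with its seed. */
    static byte[] snapshot(Random random) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(random);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    /** Stand-in for a restore: every call yields a copy with the very same internal state. */
    static Random restore(byte[] snapshot) {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(snapshot))) {
            return (Random) in.readObject();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (ClassNotFoundException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        byte[] snapshot = snapshot(new Random()); // seeded once, "during Init"
        Random firstRestore = restore(snapshot);
        Random secondRestore = restore(snapshot);
        // Both restored copies continue from the same seed, so this prints true.
        System.out.println(firstRestore.nextLong() == secondRestore.nextLong());
    }
}
```

Creating the generator only after the restore, or reseeding it in an after-restore hook, avoids the repetition.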

Another interesting capability provided by SnapStart is the ability to "warm up" a function using the Just-in-Time compiler on an external machine and deliver it to the Lambda function along with the application. This makes it possible to provide maximum performance, exceeding that which can be provided by Ahead-of-Time compilation. Certainly, however, the most impressive thing is the reduction of Cold Start: AWS is talking about as much as a 10-fold reduction in launch time. The difference between cold and warm serverless functions thus becomes practically negligible in most cases.

Amazon, of course, provided documentation. The whole thing is enabled by a single flag in YAML, but it is possible to customize the process for your particular needs, e.g. through the mentioned Hooks. A good starting point would be the official introductory post from Amazon.

Finally, some details - who, where, when?

If you want to learn more on the topic, perhaps the best place at the moment to explain the intricacies of SnapStart is Adam Bien's airhack.fm podcast, in which he talks with the author of the above introduction - Mark Sailes, who holds the position of Specialist Solutions Architect for Serverless at Amazon. The conversation touches not only on SnapStart itself but also on other challenges that may be faced by those willing to try the world of Serverless.

Amazon has been cooperating in secret with partners, and the first of them - Quarkus - has already shown off the results. Not only did the team working on the project share an extension to enable the use of SnapStart - which will appear as an experimental feature in the next release of Quarkus, version 2.15 - but they also shared their internal performance metrics, which seem to confirm Amazon's claim of a tenfold acceleration. Since the relatively fast-launching Quarkus can boast such gains, I wonder how it will look for Spring (a production application, not a "hello world" as in the example from AWS).

A certain limitation for some may be the fact that the whole thing is supported only by Corretto (a distribution of JDK by Amazon), and only version 11. However, this should not scare you. AWS Lambda doesn't support JDK 17 at this point anyway, and since the whole thing runs in a controlled AWS services environment, it wouldn't make sense to make it available for other JDK distributions. However, Amazon does not share whether there have been any modifications to Corretto itself necessary for SnapStart to work.

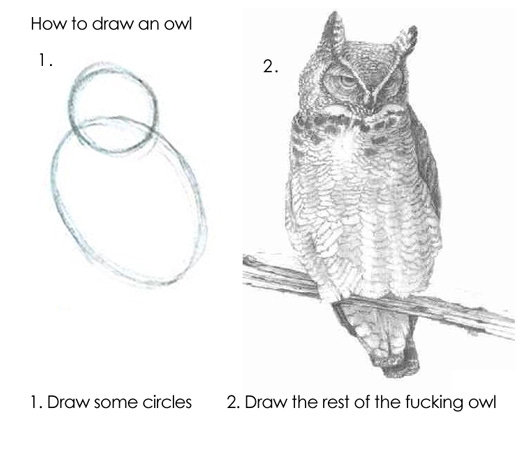

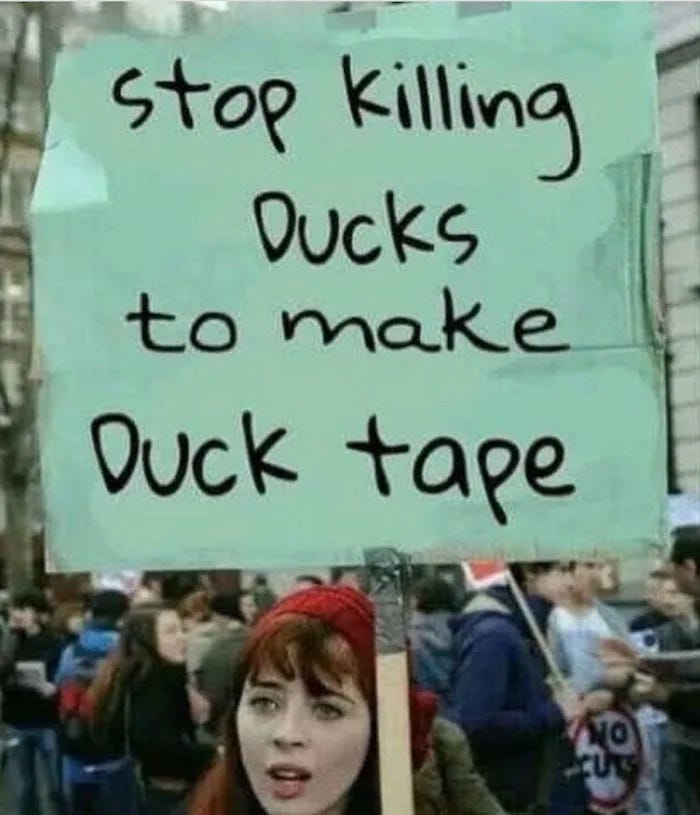

I know this article had a slightly distorted introduction-to-content ratio, but I really wanted to avoid the problem of the following owl. That's why I just took you through the whole thing step by step.

I hope you now have a better understanding of which giants' shoulders SnapStart stands on, and what had to happen along the way so that in 2022 Amazon could brag to JVM developers about the new functionality of its Lambda. And in a week's time we'll hopefully sum up everything else that has been happening around us, because we've accumulated a bit of it - unless the impending JDK 20 Rampdown surprises us with something truly big.